Luma Labs, the artificial intelligence company that previously released the 3D model generator Genie, has entered the world of AI video with the Dream Machine — and it’s impressive.

Demand to try Dream Machine overwhelmed Luma’s servers so much that the company had to introduce a queuing system. I waited all night for my prompts to become videos, but the actual “dreaming” process takes only about two minutes once you reach the top of the queue.

Some of the videos shared on social media by people with early access looked too good to be true, cherry-picked in the way you can with existing AI video models to show what they do best – but I tried it and it really is that good.

While it doesn’t seem to be on the level of Sora, or even as good as Kling, from what I’ve seen it is one of the best AI video models yet for motion understanding and prompt following. In one way it’s significantly better than Sora – anyone can use it today.

Each video generation is about five seconds long, which is almost twice as long as those from Runway or Pika Labs without extensions, and there is evidence of some videos containing more than one shot.

What is it like to use Dream Machine?

I created several clips while testing it. One was ready in about three hours; the rest took most of the night. Some of them have questionable blending or blur, but for the most part they capture motion better than any model I’ve tried.

I had them showing walking, dancing and even running. Given prompts that require that type of movement, older models might have people walking backwards, or do a dolly zoom on a dancer who stands still. Not Dream Machine.

Dream Machine captured the concept of the subject in motion brilliantly without my having to specify the area of motion. It was especially good at running. But you have minimal fine-tuned or granular control beyond the prompt.

This may be because it’s a new model, but everything is handled by the prompt – which the AI automatically enhances using its own language model.

This is a technique also used by Ideogram and Leonardo in image generation and helps provide a more descriptive explanation of what you want to see.

It may also be a feature of video models built on diffusion transformer technology rather than straight diffusion. UK-based AI video startup Haiper also says its model works best when you let the prompt do the work, and Sora is said to offer little more than a simple text prompt with minimal additional controls.

Putting the Dream Machine to the test

I came up with a series of prompts to test Dream Machine. I’ve also tried some of them on existing AI video models to see how they compare, and none of those reached the same level of motion accuracy or realistic physics.

In some cases, I just gave it a simple text prompt with the prompt enhancement feature enabled. For others I wrote a longer prompt myself, and in some cases I gave it an image I had created in Midjourney.

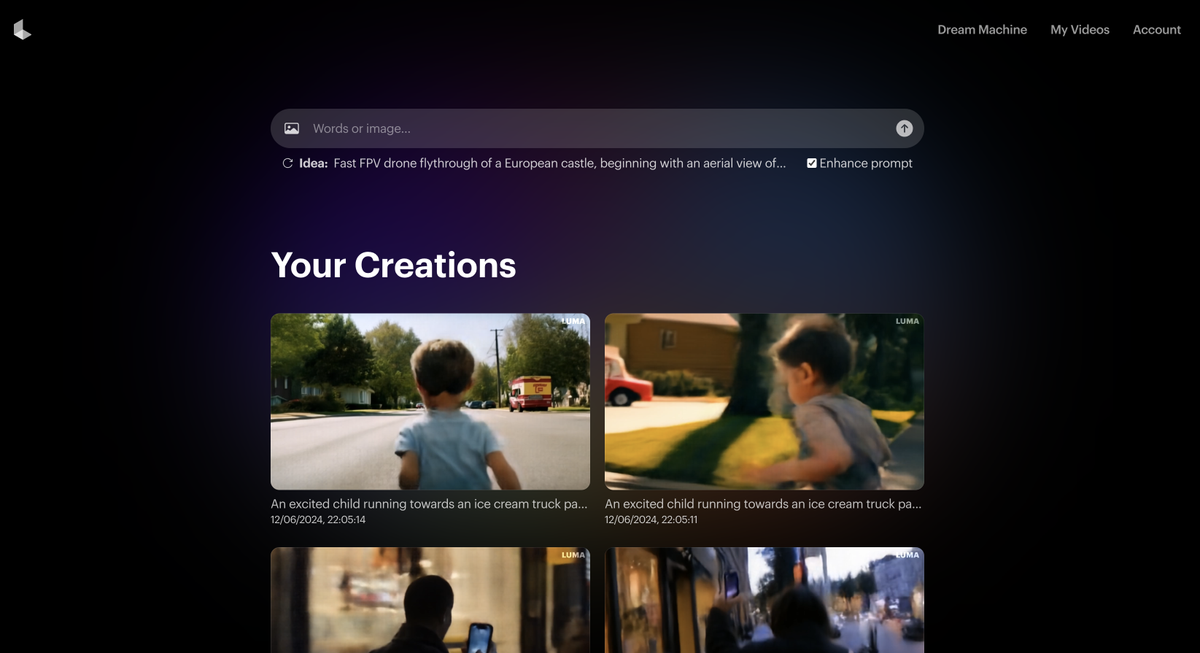

1. Run for an ice cream

For this video I wrote a longer, more descriptive prompt. I wanted to create something that looked like it was filmed on a smartphone.

Prompt: “An excited child running towards an ice cream truck parked on a sunny street. The camera follows closely behind, capturing the back of the child’s head and shoulders, their arms waving with excitement and the truck with brightly colored ice cream approaching. The video has a slight bounce to mimic the natural motion of running while holding a phone.”

It created two videos. The first made it look like the ice cream truck was about to knock the kid over, and the kid’s arm movements were a little weird.

The second video was much better. It was certainly not realistic, but there was impressive motion blur. The video above is the second take, as it also captured the idea of a slight bounce in the camera movement.

2. Enter the dinosaur

This time I just gave Dream Machine a simple prompt and told it not to enhance the prompt, just to take what it was given. It actually created two videos that flow into each other, as if they were the first and second shots in one scene.

Prompt: “A man discovers a magical camera that brings any photo to life, but chaos ensues when he accidentally takes a photo of a dinosaur.”

While there’s a bit of warping, especially at the edges, the motion of the dinosaur crashing through the room shows an understanding of real-world physics in an interesting way.

3. Telephone on the street

Another complex prompt, specifically one where Dream Machine has to take into account light, shaky motion and a relatively complex scene.

Prompt: “A person walking along a busy city street at dusk, holding a smartphone vertically. The camera captures their hand as they shake it slightly as they walk, showing views of shop windows, people walking by and the glow of street lights. The video has a slight hand shake to mimic the natural motion of holding a phone.”

This could have gone two ways. The AI could have captured the view from the camera in the person’s hand, or captured the person walking while holding the camera – first person versus third person. It chose a third-person view.

It wasn’t perfect, with some warping at the edges, but it was better than I expected given the conflicting elements in my prompt.

4. Dancing in the dark

Next I started with an image created in Midjourney of a ballerina in silhouette. I’ve tried using this with Runway, Pika Labs and Stable Video Diffusion, and in each case the model adds motion to the image but not to the character.

The prompt: “Create an attractive tracking shot of a woman dancing in silhouette against a contrasting, well-lit background. The camera should follow the fluid movements of the ballerina, keeping focus on her silhouette throughout the shot.”

It wasn’t perfect. There was a weird leg warp as she rotated and the arms seem to blend into the fabric, but at least the character moves. This is a constant with Luma Dream Machine – it’s much better at motion.

5. Cats on the moon

One of the first prompts I try with every new generative AI image or video model is “cats dancing on the moon in a space suit”. It’s weird enough that there is no existing video to draw from and complex enough for video models to struggle with the motion.

My exact Luma Dream Machine prompt: “A cat in a spacesuit on the moon dancing with a dog.” That was it, no refinement and no description of the type of movement – I left it to the AI.

What this prompt showed is that you need to give the AI some instruction on how to interpret the movement. It didn’t do a bad job, and it was better than the currently available alternative models – but it was far from perfect.

6. Market visit

Then there was another that started with an image from Midjourney. It was a photo showing a vibrant European food market. The original Midjourney prompt was: “An ultra-realistic candid smartphone shot of a bustling open-air farmers market in a quaint European town square.”

For the Luma Labs Dream Machine, I simply added the instruction: “Walking through a busy and bustling food market.” No other movement commands or character instructions.

I wish I had been more specific about how the characters should move. It captured the camera movement really well, but the result had a lot of distortion and blur between the people in the scene. This was one of my first attempts, so I hadn’t yet tried better techniques for prompting the model.

7. The end of the chess match

Finally, I decided to give Luma Dream Machine a real challenge. I had been experimenting with another new AI model – Leonardo Phoenix – which promises impressive levels of prompt following. So I created a complex AI image prompt.

Phoenix did a good job, but this was just an image, so I decided to give the same prompt to Dream Machine: “A surreal, weathered chessboard floating in a misty void, adorned with brass gears and cogs, where intricate steam chess pieces – including steam robot pawns.”

It ignored almost everything but the chessboard and created this surreal video of the chess pieces disappearing from the bottom of the board as if they were melting. Because of the element of surrealism, I can’t tell if this was intentional or a failure to understand the motion. It looks cool though.

Final thoughts

“I just did the following calculation: I got access to the Luma Dream Machine on Saturday night and over 2-3 days of playing with it, I made 633 generations. Among these 633, I think at least 150 were just random tests for fun. So I would estimate I needed about 500…” https://t.co/TpMCdDmlxy (June 12, 2024)

Luma Labs Dream Machine is another impressive step in generative AI video. It’s likely the company has leveraged its experience in generative 3D modeling to improve motion understanding in video – but it still feels like a stopgap on the way to true AI video.

Over the past two years, AI image generation has gone from weird, low-resolution renderings of people with too many fingers and faces that look more like something Edvard Munch might paint than a photograph, to being almost indistinguishable from reality.

AI video is much more complex. Not only does it need to replicate the realism of a photograph, it also needs an understanding of real-world physics and how that affects movement – across scenes, people, animals, vehicles and objects.

Right now, I think even the best AI video tools are meant to be used alongside traditional filmmaking rather than replacing it – but we’re approaching what Ashton Kutcher predicts is an era where everyone can make their own feature films.

Luma Labs has created one of the most realistic-looking AI video tools I’ve seen yet, but it still falls short of what’s needed. I don’t think it’s up to Sora’s level, but I can’t compare it with Sora videos I’ve made myself – only with the ones I’ve seen from filmmakers and OpenAI itself, and these were likely cherry-picked from hundreds of failures.

Abel Art, an avid AI artist who had early access to Dream Machine, has created some impressive work. But he said he had to create hundreds of generations to get just one minute of coherent video once the unusable clips were discarded.

His ratio is roughly 500 clips per minute of video. With each clip running around five seconds, a minute needs only about 12 clips, so he is discarding roughly 98% of his shots to create the perfect scene.
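
As a rough check on that figure, the arithmetic can be sketched in a few lines of Python. This assumes every usable clip runs its full five seconds and the finished piece is exactly one minute long; `discard_rate` is a hypothetical helper written for illustration, not part of any Luma tooling.

```python
import math


def discard_rate(clips_generated: int, clip_seconds: float, target_seconds: float) -> float:
    """Return the fraction of generated clips that do not make the final cut."""
    # Number of full-length clips needed to fill the target running time.
    clips_needed = math.ceil(target_seconds / clip_seconds)
    return 1 - clips_needed / clips_generated


# Figures quoted in the article: ~500 generations, ~5-second clips, 1 minute of video.
rate = discard_rate(clips_generated=500, clip_seconds=5, target_seconds=60)
print(f"{rate:.1%}")  # 97.6%
```

In other words, only about 12 of the 500 clips end up in the finished minute, which is where the roughly 98% discard rate comes from.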

I suspect the ratio for Pika Labs and Runway is higher, and reports suggest Sora has a similar discard rate, at least from filmmakers who have used it.
